Continuous deployment to Kubernetes with GitHub Actions

Two years ago I wrote an article titled "Simple Kubernetes deployment versioning" to describe how I used bash and envsubst to substitute image tags in my Kubernetes manifests with a unique tag for every deployment.

That worked reasonably well, but since then I have started to implement continuous deployment for most projects I work on, and my homemade solution quickly became obsolete.

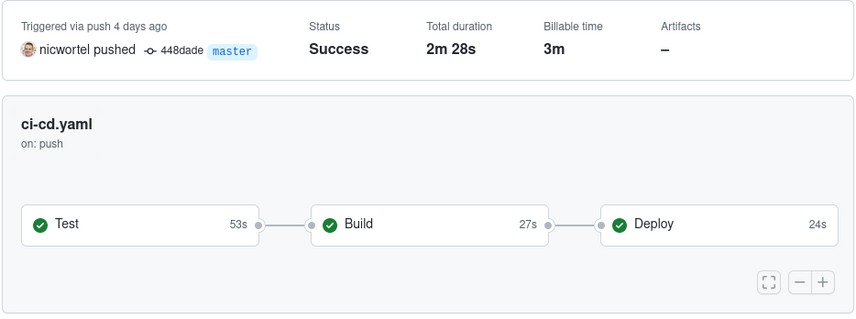

I now almost exclusively use GitHub Actions to deploy my applications, and in this article I will show how I set up CI/CD pipelines in GitHub Actions to test, build, push, and deploy Docker images to Kubernetes on every push to the main branch.

GitHub Actions is GitHub's answer to CI/CD platforms such as GitLab CI, Travis CI and CircleCI. GitHub Actions is free for public repositories, and all GitHub plans include a monthly allowance of free build minutes for private repositories.

However, the killer feature of GitHub Actions is the reusable actions that you can use in your workflows. They can replace verbose shell commands, making your workflows much easier to read and maintain.

For building and deploying my applications to Kubernetes I use open-source actions such as docker/build-push-action and azure/k8s-deploy, which come with some features to make life just a little bit easier.

Although it is maintained by Microsoft Azure, the azure/k8s-deploy action works just as well on non-Azure clusters. I personally use this approach with a managed Kubernetes cluster from DigitalOcean without any issues.

GitHub Actions workflows are defined in YAML files in the .github/workflows directory of your Git repository. While the directory has to be .github/workflows, the file itself can have any name you like. I usually name it ci-cd.yaml, build.yaml, or something similar.

At the root level of this file you define the name of the workflow, the event that should trigger it, and the jobs that are part of it.

```yaml
# .github/workflows/ci-cd.yaml
name: CI/CD

on: push

jobs:
  (...)
```

The on: push line specifies that this workflow should be triggered by pushing new commits to any branch. Later on we will ensure that we only deploy to production if the commit is pushed to our main branch.
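The on: push line is shorthand for a mapping form that also accepts filters. Suppose you would rather run the workflow only for pushes to main and for pull requests. A minimal sketch of that trigger follows; the branch name is an assumption, so use your own.

```yaml
# Expanded trigger syntax (sketch): only pushes to main, plus any pull request
on:
  push:
    branches:
      - main
  pull_request:
```

With this filter, pushes to a feature branch no longer run the tests unless a pull request is open. That is why the workflow in this article keeps on: push and restricts only the deploy job.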

Running automated tests

Let's start with a job to run automated tests. You may already be running unit-, integration- or other tests in your existing CI pipeline. With continuous deployment, tests become even more important, because you want to avoid deploying bugs that automated tests could have caught.

The exact set of testing tools depends on the kind of application and the programming language. I typically use a combination of unit tests, integration tests, and system tests, as well as static analysis, code quality, and linting tools.

In order to prevent "works on my machine" situations, I usually use Make for running automated tests. That way I can call make check both in my local development environment and in my CI/CD pipelines, minimizing the differences between the two environments.

Depending on the type of tests being run, we might also need to start a database container, install dependencies, and run some database migrations. Altogether, testing a PHP/Symfony application might look like this:

```yaml
name: CI/CD

on: push

jobs:
  test:
    name: Test
    runs-on: ubuntu-latest
    services:
      database:
        image: mysql:8.0
        env:
          MYSQL_ROOT_PASSWORD: password
          MYSQL_DATABASE: database
        ports:
          - 3306:3306
    steps:
      - name: Setup PHP
        uses: shivammathur/setup-php@v2
        with:
          php-version: '8.1'

      - name: Checkout source code
        uses: actions/checkout@v3

      - name: Install dependencies with Composer
        uses: ramsey/composer-install@v2

      - name: Execute database migrations
        run: bin/console doctrine:migrations:migrate --no-interaction

      - name: Run tests
        run: make check
```

As you can see, I'm using a few reusable actions here: shivammathur/setup-php to install a known version of PHP, actions/checkout to check out the Git repository, and ramsey/composer-install to install dependencies using Composer, PHP's dependency manager.
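The job above makes two assumptions: the application already knows how to reach the database, and MySQL is accepting connections by the time the migrations run. The sketch below makes both explicit.

- The options string passes Docker health-check flags to the service container, so GitHub waits until mysqladmin can ping the server before running the steps.
- A job-level DATABASE_URL points the application at the published port. This is the variable a default Symfony/Doctrine setup reads; adjust it if your application is configured differently. The credentials simply mirror the service definition.

```yaml
jobs:
  test:
    name: Test
    runs-on: ubuntu-latest
    # Assumed connection string; matches the service's credentials and port
    env:
      DATABASE_URL: mysql://root:password@127.0.0.1:3306/database?serverVersion=8.0
    services:
      database:
        image: mysql:8.0
        env:
          MYSQL_ROOT_PASSWORD: password
          MYSQL_DATABASE: database
        ports:
          - 3306:3306
        # Docker health check: steps only start once MySQL reports healthy
        options: >-
          --health-cmd="mysqladmin ping"
          --health-interval=10s
          --health-timeout=5s
          --health-retries=5
    steps:
      (...)
```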

Building and pushing the Docker image

When the tests have passed, we can start building the Docker image based on our Dockerfile. In order to build and push images only after the tests have passed, we'll add needs: test to the job configuration.

It's possible to omit that dependency so that the test and build jobs run in parallel, which shortens the duration of the whole workflow a bit. As long as the deploy job depends on the test job, a broken commit will never be deployed.
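Here is a sketch of that parallel variant, with job bodies elided as elsewhere in this article. The build job drops its needs key. The deploy job still lists both jobs, so it only starts once the tests and the image build have both succeeded.

```yaml
jobs:
  test:
    (...)

  # No "needs" key: build starts at the same time as test
  build:
    name: Build
    runs-on: ubuntu-latest
    (...)

  # Waits for both jobs and is skipped if either of them fails
  deploy:
    name: Deploy
    needs: [ test, build ]
    runs-on: ubuntu-latest
    (...)
```

One side effect of this variant is that an image may be pushed to the registry for a commit whose tests fail. It just never gets deployed.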

To store Docker images, I use the GitHub Container Registry (GHCR) instead of Docker Hub.

The benefit of using GHCR (apart from a low price tag) is that GitHub automatically generates a token (secrets.GITHUB_TOKEN) for every workflow run. This token can be used to push images to and pull images from the container registry, removing the need to manually create a secret containing a password for every repository.

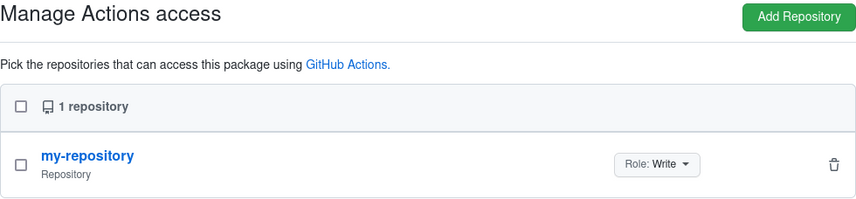

The scope of the token is limited to the repository the workflow is running in. You therefore have to create the package manually first (by pushing an image), go to its Package settings, and add the repository with Role: Write under Manage Actions access.
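Depending on your organization or repository settings, the GITHUB_TOKEN may default to read-only permissions. In that case, the job that pushes the image can request write access to packages explicitly with the permissions key. A minimal sketch:

```yaml
jobs:
  build:
    # (...) name, needs, runs-on and steps as in the build job
    # Once any permission is listed, unlisted scopes are set to none
    permissions:
      contents: read   # lets docker/build-push-action fetch the Git context
      packages: write  # lets the job push images to ghcr.io
```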

For building and pushing the image we'll use docker/build-push-action. This action builds images using Buildx, which we'll install using docker/setup-buildx-action, and we'll need to log in to the container registry using docker/login-action:

```yaml
name: CI/CD

on: push

jobs:
  test:
    (...)

  build:
    name: Build
    needs: test
    runs-on: ubuntu-latest
    steps:
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v1

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v2
        with:
          registry: ghcr.io
          username: ${{ github.repository_owner }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build and push the Docker image
        uses: docker/build-push-action@v3
        with:
          push: true
          tags: |
            ghcr.io/username/package:latest
            ghcr.io/username/package:${{ github.sha }}
```

As you can see, we are passing two inputs to the action. push: true tells it to push the image to the container registry after building it, and tags contains a tag or list of tags the image should be tagged with. In this case the :latest tag is updated and a new, unique tag with the Git commit hash is created.
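The same image name shows up again in the deploy job later on, so it can help to define it once. Below is a sketch using a workflow-level environment variable. IMAGE is a hypothetical name, and ghcr.io/username/package is still a placeholder.

```yaml
# Workflow-level variable, available in every job through the env context
env:
  IMAGE: ghcr.io/username/package

jobs:
  build:
    (...)
    steps:
      (...)
      - name: Build and push the Docker image
        uses: docker/build-push-action@v3
        with:
          push: true
          tags: |
            ${{ env.IMAGE }}:latest
            ${{ env.IMAGE }}:${{ github.sha }}
```

The images input of the deploy step could then reference ${{ env.IMAGE }}:${{ github.sha }} in the same way.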

Note that by default, docker/build-push-action uses the Git context, so we don't need to check out the repository in this job.
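The build may depend on files that only exist in the workflow's workspace, for example assets generated in an earlier step. In that case you can switch to the path context. The sketch below checks out the repository first and passes context: . so the action builds from the local directory instead of fetching the Git context.

```yaml
# Steps of the build job (sketch)
steps:
  - name: Checkout source code
    uses: actions/checkout@v3

  # (...) steps that generate files in the workspace

  - name: Build and push the Docker image
    uses: docker/build-push-action@v3
    with:
      # Build from the checked-out directory instead of the Git context
      context: .
      push: true
      tags: |
        ghcr.io/username/package:latest
        ghcr.io/username/package:${{ github.sha }}
```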

If you want to use Docker Hub instead of GHCR:

- omit the registry: ghcr.io line,
- remove the ghcr.io/ prefix from the image tags,
- create secrets containing your Docker Hub username and password, and
- pass them to the username and password inputs of docker/login-action:

```yaml
- name: Login to Docker Hub
  uses: docker/login-action@v2
  with:
    username: ${{ secrets.DOCKER_HUB_USERNAME }}
    password: ${{ secrets.DOCKER_HUB_PASSWORD }}

- name: Build and push the Docker image
  uses: docker/build-push-action@v3
  with:
    push: true
    tags: |
      username/package:latest
      username/package:${{ github.sha }}
```

Caching image layers to speed up the build

Depending on the steps in your Dockerfile, you might notice that building your image in GitHub Actions takes a lot of time, whereas on your local machine it usually takes a matter of seconds. When building your image, Docker creates a layer for every instruction in your Dockerfile. When building the same Dockerfile again, Docker reuses cached layers up to the first instruction whose inputs have changed and only rebuilds from that point on. However, because GitHub Actions spins up a clean environment for every workflow run, the build cache is empty and Docker has to rebuild your image from scratch every time.

To leverage the Docker build cache, docker/build-push-action lets you configure how it should import and export its build cache.

In my experience, the best results (especially when using multi-stage builds) are achieved with the dedicated cache exporter for GitHub Actions (type=gha). Note that this exporter is still marked experimental, but I've been using it for a few months now without any issues.

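To enable the GitHub cache exporter, configure cache-from and cache-to in the build step. Setting mode=max exports the layers of all build stages, not just those of the final image, which is what makes the cache pay off for multi-stage builds: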

```yaml
- name: Build and push the Docker image
  uses: docker/build-push-action@v3
  with:
    push: true
    tags: |
      ghcr.io/username/package:latest
      ghcr.io/username/package:${{ github.sha }}
    cache-from: type=gha
    cache-to: type=gha,mode=max
```

Deploying the new image to Kubernetes

Now that we have built a Docker image and pushed it to our container registry, we can deploy it to Kubernetes. But before we can actually deploy our new image, we'll need to set up authentication and authorization so that GitHub Actions can trigger the deployment in our Kubernetes cluster, and Kubernetes can pull our new image from the container registry.

Creating the image pull secret

In order to pull private images, Kubernetes needs credentials to authenticate with the container registry, just as you need to run docker login on your local machine to push and pull images. These credentials have to be stored as a secret, which the pod template can refer to via the imagePullSecrets field.

If you have already set up the image pull secret in your application's namespace, or if the image is public, you can skip this step.

In your GitHub account settings, generate a personal access token with only the read:packages scope. This token will be linked to your personal account. GitHub recommends giving the token an expiry date. If you do, you will have to regenerate the token and repeat the following step when it expires. Click the "Generate token" button to generate the token.

Then run the following command to create a secret named github-container-registry, replacing:

- <namespace> with the namespace of your Deployment object,
- <github-username> with your GitHub username, and
- <token> with the generated personal access token:

```shell
kubectl create secret docker-registry github-container-registry \
  --namespace=<namespace> \
  --docker-server=ghcr.io \
  --docker-username=<github-username> \
  --docker-password=<token>
```

Once the secret has been created, you can refer to it in your deployment.yaml:

```yaml
spec:
  template:
    spec:
      containers:
        - name: container-name
          image: ghcr.io/username/package:latest
      imagePullSecrets:
        - name: github-container-registry
```
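Kubernetes also lets you attach image pull secrets to a service account instead of listing the secret in every pod template. Pods that run under that service account get the secret added automatically. Below is a sketch for the namespace's default service account; replace <namespace> as above. Applying it updates the existing default service account in that namespace.

```yaml
# Pods using this service account inherit its imagePullSecrets
apiVersion: v1
kind: ServiceAccount
metadata:
  name: default
  namespace: <namespace>
imagePullSecrets:
  - name: github-container-registry
```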

Setting the cluster context to authenticate with the cluster

The next step is to allow GitHub Actions to manage resources in our Kubernetes cluster.

We'll create a service account named github-actions in the default namespace:

```shell
kubectl create serviceaccount github-actions --namespace=default
```

To authorize the service account to create and update objects in Kubernetes, we'll create a ClusterRole and a ClusterRoleBinding.

Create a file clusterrole.yaml with the following contents:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: continuous-deployment
rules:
  - apiGroups:
      - ''
      - apps
      - networking.k8s.io
    resources:
      - namespaces
      - deployments
      - replicasets
      - ingresses
      - services
      - secrets
    verbs:
      - create
      - delete
      - deletecollection
      - get
      - list
      - patch
      - update
      - watch
```

This describes a ClusterRole named continuous-deployment with permissions to manage namespaces, deployments, replicasets, ingresses, services and secrets.

If your application consists of more resources that need to be updated as part of a deployment (for example cronjobs), add those resources to the file as well. Don't forget to add the API group from their apiVersion to apiGroups.
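For example, cronjobs use the apiVersion batch/v1, so their API group is batch. Below is a sketch of an additional rule for them, appended to the rules list of clusterrole.yaml.

```yaml
rules:
  # (...) the existing rule from clusterrole.yaml
  - apiGroups:
      - batch        # group part of apiVersion batch/v1
    resources:
      - cronjobs
    verbs:
      - create
      - delete
      - get
      - list
      - patch
      - update
      - watch
```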

Run the following command to apply the ClusterRole configuration:

```shell
kubectl apply -f clusterrole.yaml
```

Now, create a ClusterRoleBinding to bind the continuous-deployment role to our service account:

```shell
kubectl create clusterrolebinding continuous-deployment \
  --clusterrole=continuous-deployment \
  --serviceaccount=default:github-actions
```
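You may prefer to keep the RBAC setup in version control next to clusterrole.yaml. In that case the service account and the binding can also be written as manifests. The sketch below should be equivalent to the kubectl create serviceaccount and kubectl create clusterrolebinding commands above; the file name is up to you.

```yaml
# Service account used by GitHub Actions
apiVersion: v1
kind: ServiceAccount
metadata:
  name: github-actions
  namespace: default
---
# Grants the continuous-deployment ClusterRole to that service account
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: continuous-deployment
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: continuous-deployment
subjects:
  - kind: ServiceAccount
    name: github-actions
    namespace: default
```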

On Kubernetes versions before 1.24, creating the service account also automatically generated a token for it and stored it in a secret.

We'll need to inspect the service account to find the name of the associated secret. It is listed under secrets and starts with github-actions-token:

```shell
kubectl get serviceaccounts github-actions -o yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  (...)
secrets:
  - name: <token-secret-name>
```
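Newer clusters no longer generate these token secrets automatically, so the output may contain no secrets entry. In that case you can apply a Secret of type kubernetes.io/service-account-token that is annotated with the service account's name, and Kubernetes fills in the token. In the sketch below, github-actions-token is a hypothetical secret name; you would use it as <token-secret-name> in the next step.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: github-actions-token
  namespace: default
  annotations:
    # Links the secret to the service account; Kubernetes populates the token
    kubernetes.io/service-account.name: github-actions
type: kubernetes.io/service-account-token
```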

Using the name of the secret returned by the service account, retrieve the YAML representation of the secret:

```shell
kubectl get secret <token-secret-name> -o yaml
```

Create a new GitHub Actions secret named KUBERNETES_SECRET, and use the full YAML output of the previous kubectl get secret command as its value. Now we can use the azure/k8s-set-context action to set the Kubernetes cluster context based on the cluster's API server URL and the service account secret:

```yaml
jobs:
  test: (...)

  build: (...)

  deploy:
    name: Deploy
    needs: [ test, build ]
    runs-on: ubuntu-latest
    steps:
      - name: Set the Kubernetes context
        uses: azure/k8s-set-context@v2
        with:
          method: service-account
          k8s-url: <server-url>
          k8s-secret: ${{ secrets.KUBERNETES_SECRET }}
```

Replace <server-url> with the URL of the cluster's API server, which can be found using the following command:

```shell
kubectl config view --minify -o 'jsonpath={.clusters[0].cluster.server}'
```
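You may not want to commit the API server URL to the workflow file. The sketch below stores it as a second repository secret; KUBERNETES_URL is a hypothetical name for a secret holding the output of the command above.

The sketch also adds an optional sanity-check step that fails early if the service account cannot list deployments. It assumes kubectl is available on the runner, which as far as I know is the case on GitHub-hosted Ubuntu runners.

```yaml
- name: Set the Kubernetes context
  uses: azure/k8s-set-context@v2
  with:
    method: service-account
    # Hypothetical secret containing the cluster's API server URL
    k8s-url: ${{ secrets.KUBERNETES_URL }}
    k8s-secret: ${{ secrets.KUBERNETES_SECRET }}

# Optional: fails the job if the context or RBAC setup is wrong
- name: Verify cluster access
  run: kubectl get deployments --namespace default
```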

Applying the manifest files

Now that all prerequisites are met, we can actually deploy our new image to the cluster. We'll use the azure/k8s-deploy action to apply the manifests. This action takes a list of image tags as input and substitutes references to those images in the manifest files with the specified tags before applying the manifests to the cluster.

This way we can commit the manifest files to version control with valid image tags such as ghcr.io/username/package:latest and replace them with a tag containing the Git commit hash right before deployment. Even if no changes have been made to the manifest files, this ensures that Kubernetes pulls the newest image and replaces the running pods. By applying not just the deployment manifest but our other manifests too, we make sure that any changes to them are applied as well.
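To illustrate the substitution, assume kubernetes/deployment.yaml contains the container definition shown earlier. Assume also that the workflow runs for a commit with the made-up, shortened hash abc123; github.sha is actually the full 40-character hash. The action should match the image by its name, ghcr.io/username/package, and swap in the tag passed via images. The manifest that is actually applied would then contain roughly this:

```yaml
# Committed to version control:
#   image: ghcr.io/username/package:latest
# Applied to the cluster (images: ghcr.io/username/package:abc123):
spec:
  template:
    spec:
      containers:
        - name: container-name
          image: ghcr.io/username/package:abc123
```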

Don't forget that the workflow is triggered by pushes to any branch of the repository. To prevent commits on a feature branch from being deployed to production, we'll add a condition to our deploy job so it only runs on pushes to the master branch. Replace this with main, develop, or whatever the name of your main branch is.

```yaml
jobs:
  test: (...)

  build: (...)

  deploy:
    name: Deploy
    if: github.ref == 'refs/heads/master'
    needs: [ test, build ]
    runs-on: ubuntu-latest
    steps:
      - name: Set the Kubernetes context
        uses: azure/k8s-set-context@v2
        with:
          method: service-account
          k8s-url: <server-url>
          k8s-secret: ${{ secrets.KUBERNETES_SECRET }}

      - name: Checkout source code
        uses: actions/checkout@v3

      - name: Deploy to the Kubernetes cluster
        uses: azure/k8s-deploy@v1
        with:
          namespace: default
          manifests: |
            kubernetes/deployment.yaml
            kubernetes/ingress.yaml
            kubernetes/service.yaml
          images: |
            ghcr.io/username/package:${{ github.sha }}
```
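Every push to master starts its own workflow run. When commits land in quick succession, two deployments can therefore overlap, and an older run could finish last. One refinement, sketched below, serializes deployments with the concurrency key on the deploy job. The group name production is arbitrary.

With this setting, a newer run waits for the running one instead of starting in parallel. If several runs are waiting, only the most recent one is kept.

```yaml
jobs:
  (...)

  deploy:
    name: Deploy
    if: github.ref == 'refs/heads/master'
    needs: [ test, build ]
    runs-on: ubuntu-latest
    # At most one deploy in this group runs at a time; the running one is never cancelled
    concurrency:
      group: production
      cancel-in-progress: false
    steps:
      (...)
```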

That's it! Once you commit and push these changes, GitHub Actions will automatically start testing, building and deploying your new image. You can further expand this workflow, for example by:

- sending Slack notifications on successful deployments,
- sending information about the new deployment to your application monitoring software, or
- spinning up an isolated environment for every pull request so you can review changes before merging a PR.